Efficient Python Docker Image from any Poetry Project

A generic Dockerfile starting point for building production-ready Python Docker images from your Poetry environment


Need to package your Python project into a Docker container? Using Poetry as your package manager? Then the Dockerfile below can be a starting point for building a small, efficient image from your Poetry project, with no additional steps required.


Successful ML projects often produce models or heuristic algorithms that solve a particular problem. Once ready, these models need to move out of those fancy Jupyter notebooks and actually be delivered to whoever needs them, and Docker images are an efficient way to do that. This is my recurring use case, but it applies to any Python project, whether or not it involves ML.

Poetry

Speaking of environments, I recently discovered Poetry and liked it so much that I will probably stick with it for all my future projects.

From Poetry to Docker

In my most recent project, as soon as I started thinking about serving the logic through an API, I searched the internet for Poetry + Docker best practices. The only examples I could find were Dockerfiles that start from a Python base image, install Poetry, and then use it to install the environment inside the container.

I want it (sm)all

What I don’t like about this approach is that, even though Poetry lets us install only the runtime dependencies and leave out the development ones, the image still ships with Poetry installed, which is a waste of space! I don’t want Poetry in my production image, since I won’t develop on it; I want a small, efficient image.

At the same time, I still want Poetry’s developer experience while working on my project: I don’t want to track my dependencies in a requirements.txt file, nor regenerate it every so often and copy it into the Docker image.

On the topic of building efficient Python Docker images, I found a couple of interesting blog posts on pythonspeed.com (the whole blog seems worth a read):

My Dockerfile

This solution relies on Docker multi-stage builds. The comments are in the file itself!

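```dockerfile
#
# Build image
#
FROM python:3.9-slim-bullseye AS builder

WORKDIR /app

COPY . .

# curl is only needed to download the Poetry installer
RUN apt-get update -y && apt-get upgrade -y && apt-get install -y curl

# get-poetry.py is deprecated; use the official installer instead.
# POETRY_HOME fixes the install location. POETRY_VERSION pins a 1.x release
# that still bundles `poetry export` (Poetry 2.x needs poetry-plugin-export).
ENV POETRY_HOME=/opt/poetry POETRY_VERSION=1.8.3
RUN curl -sSL https://install.python-poetry.org | python -

# Install into the system interpreter instead of a virtualenv
RUN $POETRY_HOME/bin/poetry config virtualenvs.create false

# Runtime dependencies only (`--only main` replaces the deprecated `--no-dev`);
# `--no-root` skips installing the project package itself
RUN $POETRY_HOME/bin/poetry install --only main --no-root

# Write (rather than append to) requirements.txt with the pinned runtime dependencies
RUN $POETRY_HOME/bin/poetry export -f requirements.txt --output requirements.txt

#
# Prod image
#
FROM python:3.9-slim-bullseye AS runtime

# WORKDIR creates /app, so no separate mkdir is needed
WORKDIR /app

# Install dependencies before copying the source, so this layer stays cached
# when only the code changes
COPY --from=builder /app/requirements.txt /app/requirements.txt
RUN pip install --no-cache-dir -r /app/requirements.txt

COPY src /app
```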

As you can see, the builder stage is in charge of recreating the Poetry environment with only the runtime dependencies and exporting them to a requirements.txt file for us.

The runtime stage starts from a fresh slim base image and uses only the requirements.txt generated in the previous stage, installing it with pip. This way, Poetry never ends up in the final image.

Notice that this image has no entrypoint or start command, since it is intended to be generic and not framework-specific. For example, to run a Flask application in production with gunicorn, add the following lines to the runtime stage:

```dockerfile
EXPOSE 8000
CMD ["gunicorn", "-b", "0.0.0.0:8000", "app:application", "--timeout", "120"]
```
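The `app:application` argument tells gunicorn to import the module `app` (an `app.py` in the working directory) and serve the WSGI callable named `application` from it. Since `COPY src /app` puts the code in `/app`, this relies on the container’s working directory being `/app` (via `WORKDIR /app`, or gunicorn’s `--chdir /app`). In a Flask project the module would just contain `application = Flask(__name__)`; to keep the sketch runnable without third-party packages, the hypothetical `app.py` below defines a plain WSGI callable with the same name instead.

```python
# Hypothetical app.py: a minimal WSGI callable named `application`.
# gunicorn's "app:application" means "module app, attribute application".
# With Flask you would write `application = Flask(__name__)` instead; a plain
# WSGI function is used here only so the sketch needs no third-party packages.


def application(environ, start_response):
    """Answer every request with a plain-text greeting naming the path."""
    path = environ.get("PATH_INFO", "/")
    body = f"Hello from {path}\n".encode("utf-8")
    headers = [
        ("Content-Type", "text/plain; charset=utf-8"),
        ("Content-Length", str(len(body))),
    ]
    start_response("200 OK", headers)
    return [body]
```

Inside the container, `gunicorn -b 0.0.0.0:8000 app:application` imports this module and serves `application` on port 8000.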

Same results, half the space

To confirm my assumptions, I created a basic project with the following runtime dependencies:

- Flask
- gunicorn
- pandas
- NumPy
- requests

Here is the pyproject.toml file:

```toml
[tool.poetry]
name = "flask-boilerplate"
version = "0.1.0"
description = "Flask Boilerplate"
authors = ["denisbrogg <twitter.com/denisbrogg>"]

[tool.poetry.dependencies]
python = "^3.9"
Flask = "^2.1.2"
gunicorn = "^20.1.0"
numpy = "^1.22.4"
pandas = "^1.4.2"
requests = "^2.27.1"

[tool.poetry.dev-dependencies]
pytest = "^5.2"

[build-system]
requires = ["poetry-core>=1.0.0"]
build-backend = "poetry.core.masonry.api"
```

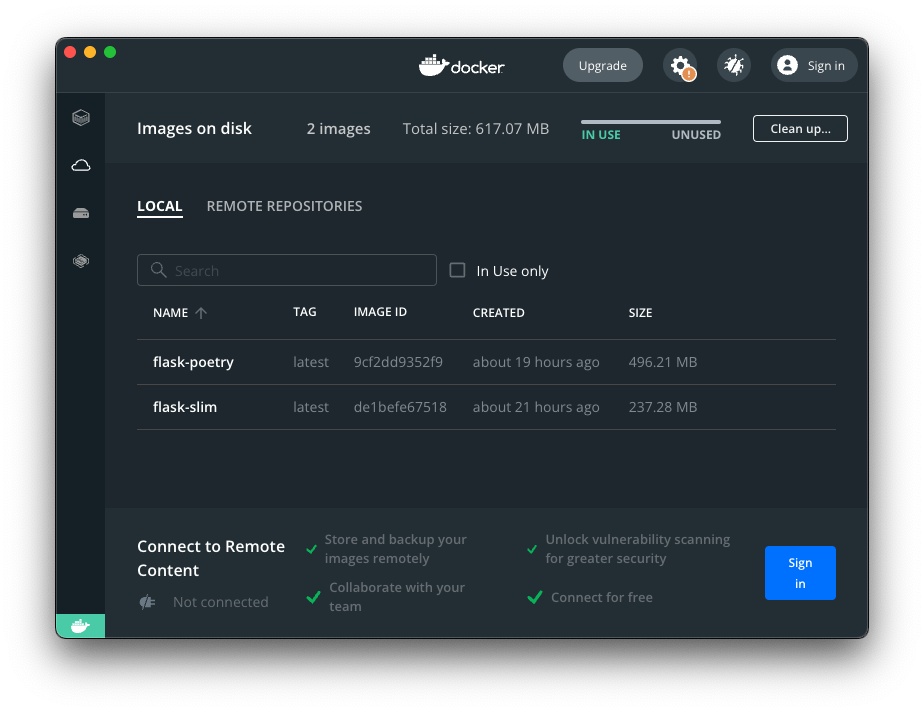

I then built two Docker images from it: flask-poetry, which contains only the builder stage, and flask-slim, which runs both the builder and runtime stages. As the image above shows, flask-slim is less than half the size of the builder image!
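To reproduce this kind of comparison on your own builds, a small Python helper can ask Docker for each image’s size. It is a sketch that assumes the Docker CLI is installed and that both images already exist locally; it uses `docker image inspect --format '{{.Size}}'`, which prints the image size in bytes. The image names are the ones used in this article.

```python
# Sketch: compare the sizes of two local Docker images.
# Assumes the `docker` CLI is on PATH and both images are already built.
import subprocess


def image_size_bytes(image: str) -> int:
    """Ask the local Docker daemon for an image's size in bytes."""
    result = subprocess.run(
        ["docker", "image", "inspect", "--format", "{{.Size}}", image],
        check=True,           # raise CalledProcessError if the image is missing
        capture_output=True,
        text=True,
    )
    return int(result.stdout.strip())


def size_ratio(slim_bytes: int, full_bytes: int) -> float:
    """Fraction of the full image's size taken up by the slim one."""
    if full_bytes <= 0:
        raise ValueError("full image size must be positive")
    return slim_bytes / full_bytes
```

For example, `size_ratio(image_size_bytes("flask-slim"), image_size_bytes("flask-poetry"))` returns a value below 0.5 when the slim image is less than half the size of the builder image.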

Conclusion

The primary goal should be a Docker image that is as small as possible, which improves portability, security, efficiency, and build times. Multi-stage builds let us get the best of both worlds: Poetry for development and environment portability, and minimal Python Docker images with only the necessary runtime dependencies installed.

Thanks for reading!